Tag: Statistics

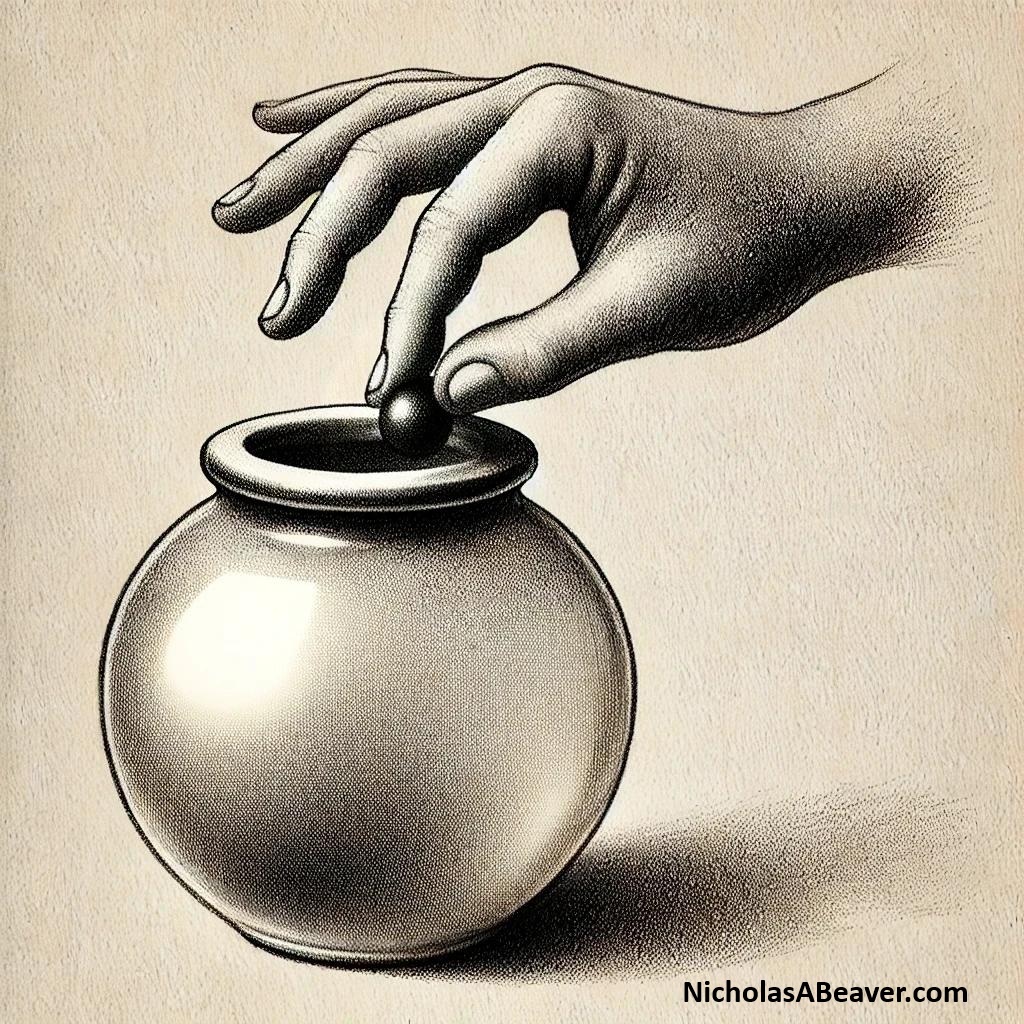

Randomness Does Not Exist

> An event is random only if it is unknown (in its totality). A state is random if it is unknown. Randomness is thus a synonym for unknown.
>
> William Briggs, *Uncertainty: The Soul of Modeling, Probability & Statistics*

“Randomness” does not exist. It does not exist anywhere. It certainly, then, does not …